By Nathan Randall, Featured Author.

Introduction

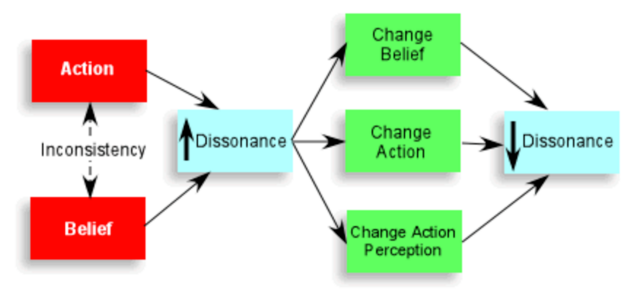

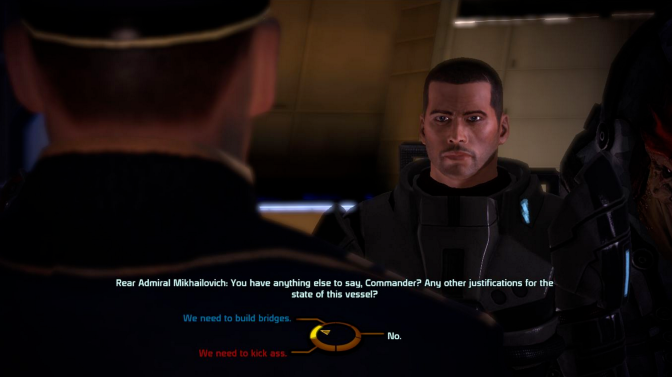

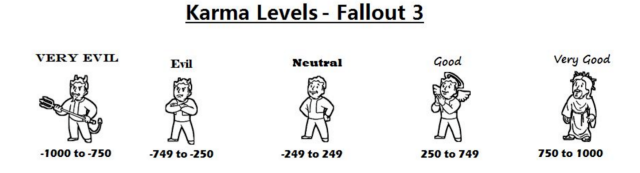

In the first two parts of Nudgy Controls, I defined an important way that a game’s controls can preserve narrative consistency in a game: through “nudges.” A nudge is an instance of player input X, which usually yields output Y, instead yielding output Z, where Y would potentially undermine narrative consistency and Z maintains narrative consistency. In Part I, I defined exactly what a nudge is and discussed a variety of types of games that maintain narrative consistency through a lack of nudges. In Part II, I defined two different types of nudges: player aids and player hindrances. Player aids are instances in which the player is assisted in accomplishing tasks that she potentially could not accomplish without assistance. Player hindrances are instances in which the player’s actions are disrupted, forcing the player to fail where she otherwise likely could have succeeded. All of these ideas are covered in depth in the previous two articles in the series, and so I do not focus on them here. For the remainder of the article I will assume the reader is familiar with the previous two articles, so I would suggest reading those first if you have yet to do so.

In this article I consider the case of The Last Guardian, which pushes the idea of a nudge beyond what our current model can explain. The game is about a young boy (to whom I refer as “the boy” and “the avatar”) who wakes up in a mysterious place away from home, and must escape with the help of a giant beast (Trico) whom he tames throughout the course of the story. Many reviewers, such as IGN and Game Informer, have claimed that this game suffers from a clunky control scheme, and that “platforming as the boy is occasionally spotty, but Trico’s inability to consistently follow your commands drags the experience down more than anything else.” [1]

It is true that the boy often hesitates in situations that surprise the player, leading to failure, and also that Trico is relatively difficult to control. However, I think this highly critical review of the game’s controls is misguided, since both the boy’s and Trico’s behavior can actually be explained by nudgy controls, once we add a few new ideas to the model. The nudgy behavior is a strength of the game rather than a flaw, because the behavior establishes and reinforces the overall narrative. Criticizing The Last Guardian for having frustrating controls while praising its narrative does not make sense because the frustrating controls help form and reinforce the narrative of the game. In this article I explain how we can view the boy’s hesitancy as instances of nudges that are sometimes player hindrances and sometimes player aids. I will also show how the difficulty of directing Trico is the direct result of trying to control a character while there are many nudges taking place. In the end we will see that control schemes should not be judged solely on how “tight” the controls are, but rather on how well the control scheme reinforces or even helps establish the narrative of the game.

The Boy’s Hesitancy

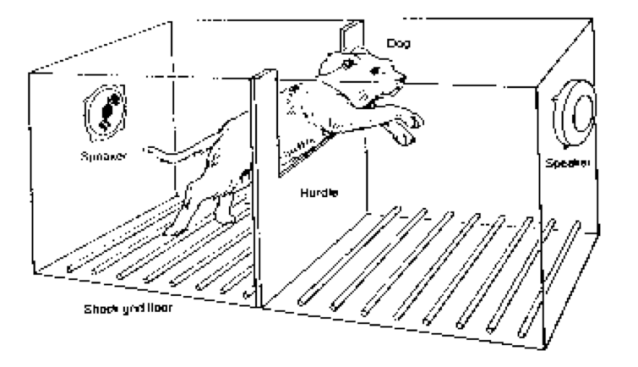

Let’s consider two aspects of the gameplay in The Last Guardian, and how we can make sense of them using nudgy controls. There are two particularly noticeable moments where an input X shifts some usual output Y to a different output Z instead. One occurs when the player attempts to give an input that would ordinarily make the avatar run over a ledge. In these moments, the avatar stops short at the edge. So instead of the expected output, in which the avatar keeps running and goes over the ledge, the output is shifted to the avatar stopping at the edge. Importantly, it’s not as if the avatar is incapable of falling. If the player makes the avatar jump off the edge as opposed to running, there is no invisible wall in the game engine that stops the avatar’s movement, and he will fall off the side.

The boy stops himself at a ledge.

The second bit of unexpected behavior occurs when the avatar is falling. Whenever the boy gets close to something stable he can grab, he reaches out to attempt to cease his fall, and succeeds so long as the object is within reach. The player is supposed to be able to stop the boy from doing this by holding a particular button, allowing him to instead just continue to fall.

The boy reaches out to grab a ledge as he falls.

But even while the player is holding the button down, the boy will often still grab things close to him while falling, especially if they are very close to him, or a part of Trico he can hold onto (an indication through gameplay of the boy’s trust and care for Trico). In this way, when the player is holding the relevant button, the usual output of continuing to fall is sometimes shifted to grabbing on to something to cease the fall.

But is the nudge of the boy staying away from ledges a player aid or a player hindrance? And what about the nudge of the boy breaking his fall? Upon reflection it becomes apparent that these behaviors sometimes act like player aids and sometimes act like player hindrances.

Initially, one might be tempted to declare that stopping at the edge of a platform is a player aid, since stopping at the edge of a platform would prevent an untimely death in the form of a lethal fall for the boy. But the answer is not so simple, as evidenced by the fact that many reviewers were frustrated by the nudges “messing them up” in some way. Game Informer in particular says that “the imprecise controls make the journey rough.” [2] For example, if the boy gets to a ledge right as the player attempts to jump, then the boy will stop his momentum entirely, messing up the player and frequently leading to accidentally falling off of a ledge as the player frantically adjusts her plan for the situation. Is this not an instance of a player hindrance?

Similarly, ceasing a fall while the player is attempting to prevent that action might initially seem to simply be a player hindrance, since the player did not want that action to occur. If there are many things for the boy to grab during his fall, dropping down can take quite a bit of time and effort if he grabs every ledge, which is potentially very bad for the player when there is some time-limited objective to complete. And if an enemy is approaching the player, then delay in getting to the ground could lead to the enemy capturing the boy. So an instance of the boy breaking his fall when the player is trying to make him fall seems like it must certainly be a hindrance. But what if the player misjudged the distance? Then the boy grabbing a ledge before landing on the unforgiving ground could also potentially save the boy’s life—certainly an example of a player aid. At times, the boy’s caution makes execution of the player’s goals more difficult, even though the same caution often prevents the player from making careless errors.

So it appears that at times these are player aids and at times they are player hindrances. In the rest of the analysis, I will refer to such nudges as mixed nudges. But I get ahead of myself, as there is still one more important aspect to consider before declaring that these are nudges. I must show that they preserve narrative consistency in some way. In order to do so I will introduce one more idea into our model, which I will term avatar perspective.

Avatar Perspective and Mixed Nudges

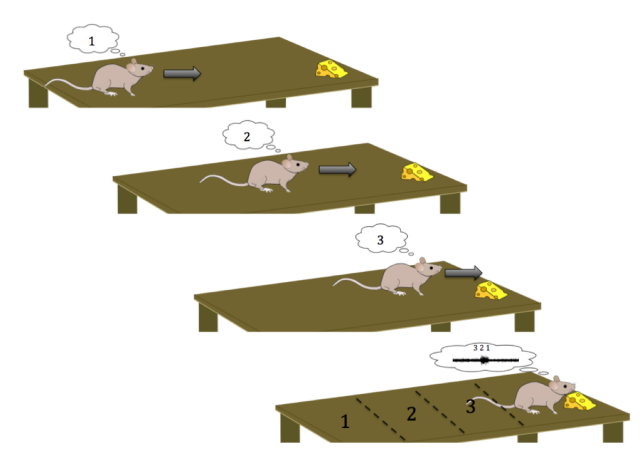

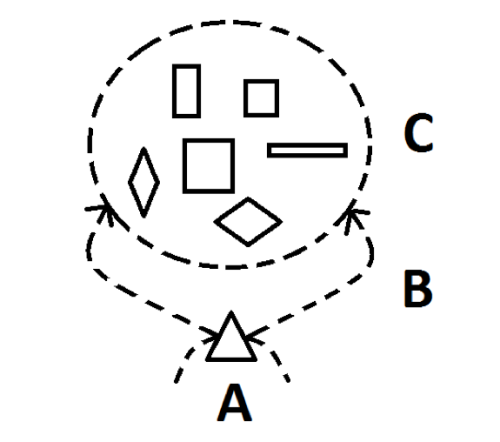

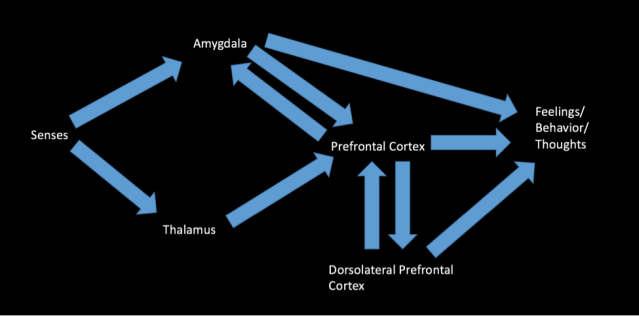

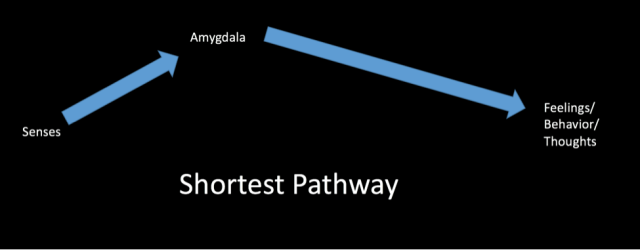

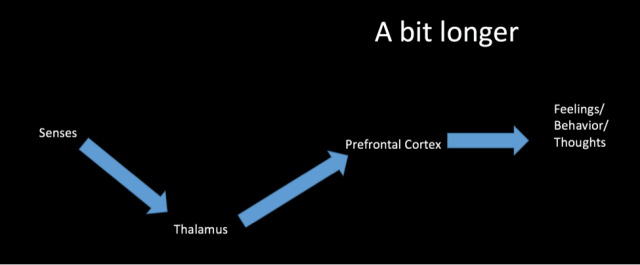

Just as the player has the capacity for perception, so too does the avatar within the fiction of a game. [3] The ability to perceive gives rise to a consistent way of viewing what is perceived that is unique to the individual because every person has a unique set of perceptions. I will call these consistent ways of viewing perceptions perspectives. One aspect of a perspective is that someone with a given perspective will view certain things as belonging to the same category, such as things that are square-shaped, things that are scary or not scary, or actions that are moral or immoral. There are a nearly infinite number of possible categories, and exactly which items make up a particular category varies from one perspective to another. Players and avatars all have the capacity for perception, and thus they all have a unique perspective, and thus unique ways of categorizing what they perceive. This includes the boy in The Last Guardian, whose actions in response to player input reveal various aspects of his perspective.

In general, the player and the avatar’s perspectives will not align with each other, simply because perception is unique to an individual. But the amount that the perspectives differ is not consistent: the player and the avatar may have very similar perspectives, but they may also have incredibly different perspectives. The way in which perspectives differ is not consistent, either. The avatar may lack a moral compass and have no issue with the murdering of children, even though most players view such an action to be repugnant. It’s possible to have a player that is color blind and an avatar that is not. And lest you think that vast differences in player and avatar perspectives are uncommon, consider any game with a third-person camera, in which the visual perception of the player and the avatar differs greatly just because of an offset in camera placement within the game engine.

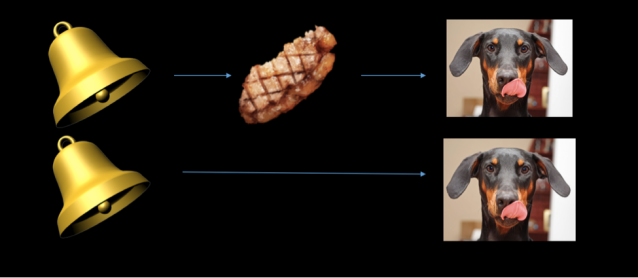

Differences in perceptions and ensuing perspectives between the player and the avatar can be crucial in analyzing mixed nudges. The relevant difference in perspective in The Last Guardian has to do with which sets of objects are viewed as being within the same category. There are many possible categories to consider. For instance, let’s consider the category of corgis that look the same to an individual. For the sake of the example let’s say that I am not familiar with corgis, and that you, the reader, are. In that case, most corgis will look alike to me, even though you’d be able to discriminate between the dogs with relative ease. A similar situation arises between the player and the avatar in The Last Guardian.

Above is how I see four corgis versus how you see four corgis. Notice that to me, all the dogs look the same, whereas to you, each dog looks at least slightly different.

Specifically, there are many situations that the avatar of The Last Guardian sees as belonging to the category of “situations that are dangerous for the boy” that the player does not see as belonging to that category. The avatar has very simple perceptive rules in this regard: all situations of falling and being close to a stable object to grab onto are dangerous and so demand the same response. Likewise, all situations of running toward a ledge are dangerous and so demand the same response. The player, in contrast, likely does not see all of these situations as belonging to the same category. Specifically, when the avatar is already close to the ground upon starting to fall, the player would not see this as a dangerous situation for the avatar, even though the avatar would see it as dangerous. And when the avatar is running toward a ledge and the player is preparing to make the avatar jump at the ledge, the player likely does not consider this situation to be as dangerous as the avatar considers it to be.

The existence of nudges in conjunction with avatar perspective ends up being surprisingly rich in its ability to endow a character in a narrative with clear desires. The consistent way that the avatar acts in response to situations she views as belonging to the same relevant category implies that there is some consistent desire that the avatar is acting upon. These desires form the basis of personality traits. The mixed nudges in The Last Guardian serve as clear examples of the creation of personality from avatar perspective.

The boy views a set of situations as equivalently dangerous. These situations are any in which he is running toward a ledge, and any situations in which he is falling and has something he can grab onto to cease his fall. From these situations we learn that the boy has a desire to avoid injury and death—a fairly sensible desire in general, but also one that makes a lot of sense for a young boy in the dangerous situations he finds himself in. Sometimes this desire is helpful for the boy in that he avoids dangerous situations, and other times the same desire leads to distraction and clumsiness that makes it harder to achieve his goals.

The boy climbing over a ledge.

The mixed nudges in The Last Guardian preserve the consistency of the boy being young and afraid. By having the nudges sometimes be player aids, the player can see that the nudges are not present to show that the boy is clumsy, and by having the nudges sometimes be player hindrances, the player learns that the aids do not arise out of training or a high degree of innate competence. Rather, the mixed nudges preserve the character of the boy as being someone trying not to hurt himself while doing dangerous things, but not always reading the situation correctly because he is young and inexperienced. His category of situations that are dangerous is too broad.

By taking into account avatar perspective, we can explain how what initially seem to be fairly clunky controls are actually instances of nudges that are sometimes player aids and sometimes player hindrances. These mixed nudges do a lot of work in preserving the consistency of the boy being young, afraid, and in a dangerous situation that he does not always navigate perfectly or elegantly, even with the help of a very experienced or skillful player, even though he will not be goaded into reckless action by an incompetent or non-cooperative player. [4] This suggests that the reviews mentioned at the beginning of the article were misguided in criticizing The Last Guardian for the clunky control scheme for the boy, since the controls in fact make the character of the boy more vivid.

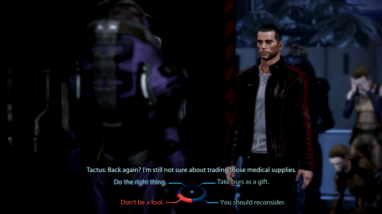

Player-Controlled Entities

Reviewers who criticized The Last Guardian spoke not only of difficulty controlling the boy, but of difficulty controlling Trico as well. Polygon reviewer Philip Kollar points out that Trico’s behavior “makes for a realistic depiction of my favorite house pet [a cat], but it’s terrible gameplay.” So at this point I will switch gears to discuss the other half of the duo featured in The Last Guardian. I disagree with Kollar’s claim that Trico’s behavior is terrible gameplay: the gameplay may be frustrating, but that does not make it terrible. The gameplay is actually highly effective at building the character of Trico. The difficulty of controlling Trico can be explained by the presence of a large number of mixed nudges in the actions of Trico that actually reinforce Trico’s character rather than detract from it.

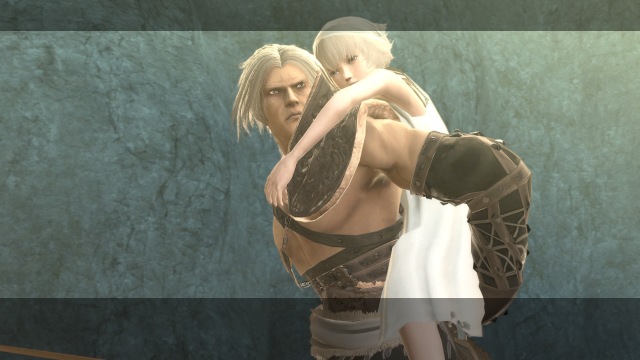

Note that in order for this analysis to work we may need to consider nudges that apply to things the player has control over generally, rather than specifically avatars. While Trico is not necessarily an avatar, he is a character in the game over which the player has at least a degree of control.

Intuitively there is a distinction between avatars, defined roughly as the entity that the player controls as an entry point into a game, and entities in the game that the player controls through the avatar, which belong to a larger category of player-controlled entities. [5] While most players would likely disagree with the claim that Trico is the player’s avatar, he is definitely a player-controlled entity.

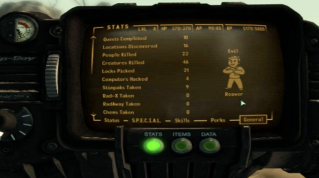

There are many games that have a character that is not necessarily an avatar, but is definitely controlled by the player through the intermediary of the avatar. Super Smash Brothers is one notable example, since it has two examples of playable “characters” that consist of multiple entities. One of these is the Ice Climbers: the player directly controls Popo, canonically the climber wearing blue; Nana, canonically the climber wearing pink, does the same actions as the climber wearing blue, but slightly delayed in time. The other is Rosalina and Luma, a space princess and a sentient, star-shaped creature that she commands, respectively. These two can move as a unit or separate themselves and perform the same actions while standing apart from each other.

Rosalina and Luma.

The Ice Climbers in action. The one in blue is Popo and the one in pink is Nana.

In the case of the Ice Climbers, what narratively justifies this gameplay is the tight bond of friendship and trust between the climbers. The two characters have climbed dangerous mountains together, and have presumably gotten to the point where they can communicate so quickly and effectively that it is as if they were reading each other’s minds, and so can coordinate actions in a way that initially seems to be impossible. In the case of Rosalina and Luma, Rosalina is casting spells on Luma that get him to take the same actions as Rosalina instantaneously.

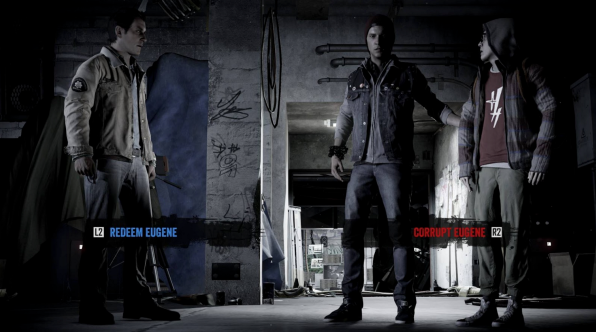

I will define the unit of two player-controlled entities where one is definitely an avatar of the player and the other is an entity being controlled by the player through the avatar to be a partnership. I will mostly not be focusing on the entity that is definitely an avatar (which I will just call the avatar), because we have already discussed that entity in detail in this series. Instead our attention will be on the other entity in the partnership (which I will call the partner). In general across the examples we will look at, the control players have over the avatar when also controlling the partner does not contain nudges. This is not necessarily a rule that must be followed, but examples of that sort would be very difficult to analyze, and so we will not be considering them in the scope of this article.

Within most game narratives, if a partnership exists, there is some dynamic relationship between the characters in the partnership. It turns out that this relationship can be defined and enforced by gameplay. This will prove to be a crucial idea when considering the example of Trico in The Last Guardian. So let’s consider more generally how gameplay can enforce various aspects about the relationship between the partners in a partnership. In this section we will consider two relational aspects in particular, both of which will be important in analyzing Trico’s behavior: how well an avatar and partner are able to communicate with each other, and whether a partner intends to cooperate with an avatar.

The gameplay for the Ice Climbers describes both of those relational aspects quite simply. The nearly simultaneous actions of the climbers show how these two characters can communicate quickly and effectively with ease. And since the climbers never act antagonistically toward each other, they clearly determined long ago that they intend to cooperate with each other.

The Ice Climbers are just one example, however. There is no reason that a partner needs to be able to communicate well with the avatar or intend to cooperate with the avatar. Both of these factors are at play in the example of Trico. Let’s consider two examples of partners that speak in important ways to how the avatar and partner in The Last Guardian do or do not communicate.

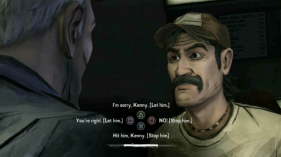

For our first example, let’s say that a developer would like to create a game with a partner who is a femme fatale. While she is incredibly sharp and picks up on everything that the player commands her to do, sometimes she acts mischievously based on a set of intentions that the player is unaware of. Through gameplay that has her usually be responsive to player input except in certain circumstances where she acts against player direction, the developer could maintain this sort of characterization very effectively in the narrative. So the extent to which a partner is responsive to player input can give insight into the level of cooperation between the avatar and the partner. Note again that this analysis only works if the relevant gameplay is not nudgy in terms of controlling the avatar as opposed to the partner.

One particular manifestation of the archetype of femme fatale is Kainé from Nier. She sometimes assists Nier, the titular character and player’s avatar, in various combat situations. It might surprise some people who have played the game, but it is in fact possible to give Kainé a small set of specific commands.

The menu screen for issuing commands to Kainé (1/2).

The menu screen for issuing commands to Kainé (2/2).

However, Kainé’s behavior does not change much when issued these commands, hence why few people use the feature at all. Even though she is clearly aware of the command issued to her, she apparently has no desire to heed the requests made of her, evidenced by the fact that she literally does not act upon the requests. This is all fitting to her character as a perpetually angry, foul-mouthed warrior.

Kainé killing a monster, but probably not listening to the player.

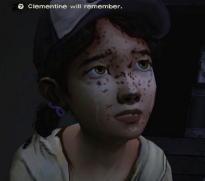

Now consider a game where the avatar’s partner is someone who is only slightly conversant in the language that the avatar speaks. In this case, that partner, who is player controlled, is slow to respond to player input, or doesn’t respond at all, simply because that message cannot be efficiently communicated, if at all. Unlike the previous example, there is no malevolence or masking of intentions: the gameplay speaks specifically to the inability of these two characters to communicate with one another. A very frustrating example of this is Hey You, Pikachu, a 1998 game in which the player communicates with Pikachu on-screen, attempting (almost always unsuccessfully) to get Pikachu to perform a variety of actions.

Pikachu almost certainly misinterpreting the player’s input.

While Pikachu intuitively does not appear to be the player’s avatar, because the avatar is apparently the character from whose perspective we are seeing Pikachu, Pikachu certainly is controllable by the player. [6] [7] But the player usually has such difficulty communicating with Pikachu that it is as if Pikachu were not controllable at all. On the level of literary criticism, the issue with Hey You, Pikachu is that Pikachu is so difficult to communicate with that it appears as if he is actually very stupid, as opposed to simply being an animal. This shows the power of gameplay in characterizing a player-controlled entity.

Moving forward I will use these two examples of inter-partner communication to think about Trico’s response to the player’s actions through the intermediary of the avatar. A general inability to communicate, and an unwillingness to cooperate even when the message is understood, are important aspects of the relationship between the boy and his beast that the gameplay highlights and reinforces.

Trico’s Behavior

We now have the groundwork necessary to analyze how Trico’s behavior preserves the narrative consistency in The Last Guardian. To see how this is the case, I will first define one of Trico’s behaviors in question. From there I will show how Trico’s behavior can be seen as mixed nudges and that those mixed nudges arise from Trico’s perspective differing from the player’s in one of the two ways mentioned in the previous section. Trico either does not understand the message, or Trico has an intention that differs from the player’s.

One primary way of communicating with Trico is to give him a visual cue of where to move. As anyone who’s played The Last Guardian knows, getting Trico to actually do this is often a long and frustrating process, as he often does not notice what the player is asking him to do, does not understand, or just refuses to do it. This leads to a situation where the player input can yield a wide variety of responses from Trico, some of which help the player, some of which are neutral, and the rest of which hinder the player in some way.

In this way, we can see that the output-shifting required for a nudge exists: the player input can yield any of several outputs from Trico. I remind the reader that the gameplay for controlling the avatar in these circumstances of directing Trico is nudgeless, and so we do not need to worry about compound nudges. Since the nudges can hinder the player in some circumstances and help in others, the nudges are in fact mixed nudges. But what of preservation of narrative consistency? What does this gameplay accomplish in terms of that?

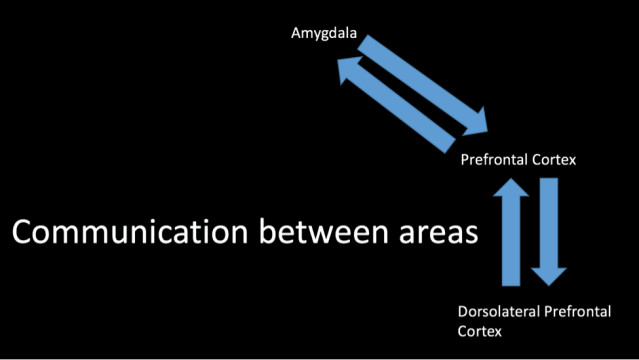

Interpreting Trico’s Behavior

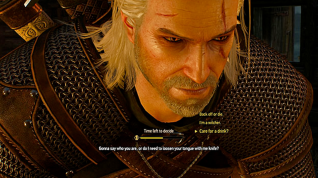

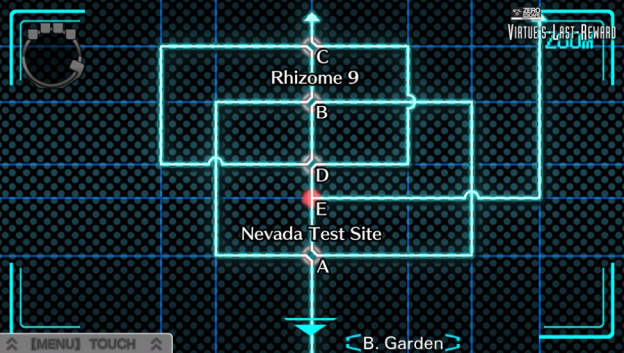

Since Trico is a sentient being, he, like the player and the avatar, has a unique perspective. The problem is that since Trico is a beast, his perspective frequently differs from that of the player, who is human. Trico’s larger size means that he looks at the navigation of physical space differently from the smaller human avatar. There are certain things out in the world that scare Trico, especially stained glass images of eyes, that do not have the same impact on the player or the avatar.

The stained glass eyes that frighten Trico.

And Trico is uncontrollably attracted to certain scents that do not seem to have any impact on the avatar. This is all evidence for Trico having a consistent perspective based on his non-human sense modalities.

The difficulty of communicating with Trico arises from the inherent difficulty of bridging the divide between avatar and partner in terms of language and species, such that the player can communicate what she wants to Trico through the avatar, and the player can understand what Trico needs in return. When the player gives a command to Trico, if he sees it and understands it, Trico then responds by performing the desired action, and we can view his behavior as a player aid. If Trico does not see the command or is unable to understand, his lack of action ends up being a player hindrance. The mixed nudges present in this case preserve the narrative that Trico does not have an easy communication channel with the boy at the start of the game, and may not be able to understand what he is being asked to do. This is similar to the example of Pikachu from Hey You, Pikachu: he often literally does not understand the commands he is given, and thus cannot act upon them in a logical way. The mixed nudges further drive this lack of ability to communicate expediently home.

Trico not understanding his commands is not the only source of nudges in his behavior, however. There are times when Trico understands what the player is asking him to do, but does not want to perform the action, similar to Kainé’s reactions to commands in Nier. One clear example of this is when the player is asking Trico to jump into the water. It takes a while to goad Trico into jumping in the water in the first place, and he is quick to get out whenever given the chance. Apparently he does not like getting wet. These player hindrances (moments when Trico does not quickly perform an action even when he understands it, because he has different intentions and desires) preserve the narrative that Trico is a being with feelings and desires, as opposed to just a robot that processes inputs from the player and acts if he understands the command. The usual output of Trico performing the action when he understands the command shifts to Trico (at least temporarily) not performing that action. Trico, like Kainé, thinks and feels for himself, and that comes out in the gameplay.

“Training” and the Disappearance of Hindrances and Mixed Nudges

Over the course of the game, the frequency of moments in which Trico stares dumbly back at the player lessens. The net impact of this is that as the game progresses, many mixed nudges get replaced by player aids, as commanding Trico to do certain tasks gets easier and easier. This change in the nature of the nudges in the game over time preserves the narrative that Trico is being trained and forming a bond of friendship with the boy. As these two characters work together more and more, it becomes easier to communicate quickly and effectively. The boy has taken on the role of an animal trainer and created a capacity for communication with an animal with whom most people are unable to communicate.

Some of the player hindrances start to disappear toward the end of the game as well. There is a moment in particular when the boy is in danger of being captured by moving statues where Trico overcomes his fear of the stained glass eyes to jump in to destroy the statues and save the boy. The disappearance of these hindrances preserves the narrative that Trico cares for the boy and is willing to overcome fear and danger in order to save the boy, just as the boy overcomes his own fears and dangerous situations to save Trico. The existence of a vast number of mixed nudges early in the game that gradually turn into mostly player aids (or at least mixed nudges that are aids far more often than hindrances) over time displays the growing bond between these two characters. The game succeeds at displaying the birth of this friendship through the nudges in the gameplay as opposed to dialogue or cut-scenes, which are few and far between in the game.

Trico and the boy connecting with each other.

Responding to Critical Review

Game Informer complains that “Trico’s inability to consistently follow your commands drags the experience down more than anything else,” yet they also say that “The Last Guardian forges a connection between the player and Trico unlike anything else in gaming.” Now we can understand that Trico’s inability to consistently follow commands is actually a crucial part of how that special connection gets forged. While it is tempting to view the inconsistencies in the control scheme as factors that make The Last Guardian worse, it actually is the case that the controls do work to develop the relationship between the boy and his beast. [8] The nudges present in the boy’s gameplay reinforce his status as a young child, and the nudges present in controlling Trico reinforce his status as a non-human creature. It is not as The Verge author Andrew Webster says: “Often [the controls] don’t work as they should, and you’ll need to push through some terribly frustrating moments to experience everything The Last Guardian has to offer.” Rather, the terribly frustrating moments are an essential part of what the game has to offer in creating the relationship between the boy and Trico.

Although it may be initially tempting to criticize a game because of “clunky” controls, I hope that this analysis has shown that it’s worth taking pause to consider what a game’s control scheme may be saying about the story of the game itself. While it is true that at times controlling the boy and Trico is difficult in surprising ways, these aspects of the gameplay carry weight in preserving the narrative consistency of the game. The mixed nudges present in controlling the boy drive home his attempt to be cautious, even though his youth sometimes leads him to misread situations. The wide variety of nudges present in controlling Trico drives home his status as a non-human animal, and the change in types of nudges over time shows how he forms a strong bond and ability to communicate with the boy. Kotaku reviewer Mike Fahey sums it up well by saying “The unpredictable AI can make for some frustrating moments, but that frustration only enhances the illusion that this strange cat-beast is a living thing. I am not irritated with a video game. I am irritated with my large feathered friend.” [9] The game uses nudges in a way that is poignant and subtle to develop the relationship within the partnership that the game features.

Directions for Future Research

We’ve covered a lot of ground in these articles. Starting from defining nudgy gameplay and progressing through games that don’t need nudges to games with player aids and hindrances, and then on to games with mixed nudges based on avatar perspective, we’ve seen a wide variety of ways that games have dealt with the variable that is the player in ways that preserve their narratives. My hope is that the reader uses this way of thinking to critically analyze the games that they play, including ones that I did not discuss in this article specifically, and that these articles can serve as a starting point for further analysis.

To that end, there are many topics I brought up in these articles that I did not have space or time to comment on to the degree that is deserved. I think it pertinent to bring up a few of those topics and pose questions as a place to leave the reader at the end of this work. Hopefully one of these questions will spark a reader’s thinking and they will think of some way to explain some aspect of the stories in video games that at this point remains elusive.

One topic that I hinted at but did not dive into for lack of space is the issue of the definition of ‘avatar’. While the term is frequently used among game fans and analysts alike, the word does not seem to have a consistent definition. So what exactly is the avatar? How does the avatar differ from other player-controlled entities? WaTF founder Aaron Suduiko has some foundational thoughts on these questions in the form of his senior thesis, which is an ontology of single-player video games. But other than that work, the question at this point has no clear answer.

Another open topic is multiplayer generally, which I discussed in Part I of this series in the context of multiplayer skill tournaments and how games of that sort are better off remaining nudgeless. One challenge in writing that section was identifying exactly what the narrative of a multiplayer game is. Finding the narrative within a multiplayer game is not as easy as it might initially appear. Consider, for example, a group of six players cooperatively playing a Destiny mission. While there is a story presented by the game in terms of voice lines and cut scenes, there is also a narrative being woven within the conversation between the players, which need not actually bear any relation to the cut scenes and voice lines. Which of these is the dominant narrative? Or do they coexist? How do you analyze a narrative that has multiple agents influencing the narrative’s events? This is massively under-explored territory, even here on With a Terrible Fate.

Nudgy Controls Conclusion

Participatory storytelling has a unique challenge to handle: how does a storyteller convey a cohesive narrative to an audience that has a hand in the instantiation of that narrative? We can all imagine an audience member in some participatory theater who gets bored and rolls his eyes at a dramatic moment in the show, critically undermining the believability of the narrative being presented. This sort of challenge is a constant issue for writers of stories for games. How do you make sense of the role of the player in your story? What if the narrative requires skill on the part of the player that the player does not possess? What if your player is too skillful in a moment when failure is expected? What if your player’s desire is to try and break the narrative consistency of your game through their actions? In general, how do you handle the variable that is the player, who is importantly external to your game?

Sometimes the most effective technique is to nudge the player’s input toward a more narratively appropriate output in the controls themselves. We’ve seen how doing this can make a character appropriately badass regardless of player skill, and how it can be used to make vivid the critical condition of a dying character. But beyond that we’ve seen an even more subtle and fascinating capacity that these nudges possess. Nudgy controls can create and reinforce character traits and relationships, to the extent that a game like The Last Guardian needs little exposition other than just the gameplay itself.

It’s time to stop judging the control scheme of a game solely on how “tight” the controls are. Sometimes a game’s controls are difficult, or frustrating, or even too easy, in a way that reinforces the narrative of a game. Gameplay and narrative are inseparable. Let’s start judging control schemes based on how well they work with the narrative, rather than in the superficial ways we have been up until now.

Nathan Randall is a featured author at With a Terrible Fate. Check out his bio to learn more.

[3] For this section, I stipulate that the avatar is a sentient being, for sake of simplicity. This is not actually a requirement for the analysis to work, but it makes the argument easier to follow.

[4] While mixed nudges that arise from personality traits and perspectives, such as the ones described in the previous section, are deep and rich, they are not the only possible manifestation of mixed nudges. To see this, consider the following case. One could imagine a science fiction game in which the avatar has a “quantum fuse box” implanted into his brain. The device works in the following way: half of the time it is activated, it makes the avatar successful at whatever he attempts to do, aiding the player tremendously, and the other half of the time it forces the avatar to fail at whatever he attempts to do, hindering the player. The activation of the device occurs randomly, and the output of the device is random.

This hypothetical game definitely has nudges whenever the device is activated, in that any input on the part of the player is shifted, and the nudges preserve the narrative consistency of the existence and effectiveness of the quantum fuse box. But the nudges are player aids half of the time and player hindrances half the time, meaning that they are mixed nudges. So there is no requirement for mixed nudges to arise out of avatar perspective. Thanks to Aaron Suduiko for proposing the quantum fuse box example.

[5] Player-controlled entities and their subset, avatars, actually end up being incredibly rich and complicated territory to consider. All avatars are player-controlled entities, but it’s not clear where the dividing line between the categories is. What differentiates a player-controlled entity from an avatar? Are any of the individual units in a game like Halo Wars avatars? In a role-playing game in which the player controls an entire party of characters, is each character just a player-controlled entity, or an avatar as well? Are all of the characters avatars of the player? Is one character the player’s avatar and the rest just player-controlled entities? The answers here are not clear, and so for the most part I will leave these questions unanswered, as the answers are likely long and tangential to the topic at hand. This leaves open the possibility that player-controlled entities and avatars are in fact the same set of entities, making one of the two terms redundant. Intuitively this does not seem to be the case, as it seems that some things are avatars and others are simply player-controlled.

[6] I leave open the possibility that Pikachu is the player’s avatar, but common intuition from players is that while he is controllable by the player, he is not the player’s avatar.

[7] Note how even in first-person, in which we cannot see a manifestation of our character on-screen, we still think of the character through whose eyes we are seeing as the “avatar.” There can be no figure on screen and yet we can refer felicitously to an avatar being present. This is odd and warrants further analysis.

[8] Of course, my analysis of nudges in The Last Guardian doesn’t excuse all of its control issues. I readily admit that controlling the camera in The Last Guardian is pointlessly difficult and that the game would have been better with tighter camera control.

Laila will be exploring how horror storytelling in video games fits into broader, long-standing traditions of horror in folklore, mythology, and literature. What does BioShock have to do with the Odyssey? How does Lovecraftian horror come about in S.O.M.A.? What insight can a Minotaur give us into Amnesia? Laila has answers to all of these questions–oh, and she’ll be talking about “daemonic warped spaces” and P.T., too.
